Prerequisites

- AzureRM PowerShell modules (specifically AzureRM.Resources)

- Azure Active Directory administrative access

- Optional: Download/clone my repo

Creating a self-signed certificate

Disclaimer: Most of the scaffolding of the certificate code is copied from other blog posts. There are some tweaks that are my own.

I’ve created/modified a PowerShell script which creates a new self-signed certificate with a 1-year validity starting from the date you run the script. You could increase this if you want, but I recommend using a certificate rollover strategy instead of relying on certificates with an infinite period of validity. When you’ve got a proper certificate strategy it doesn’t really matter if it’s 1 year, 2 years, 3 months, or whatever; it’s something that should be automated and easy to maintain. The steps to the script are as follows:

- Specify an FQDN, and use something descriptive (“MSDYN365 cert” is not really descriptive)

- Enter a password used to encrypt exported certificate PFX

- Enter path to store exported PFX

- Enter desired AAD App name

- Enter desired AAD App homepage Uri (does not have to be a valid address)

- Enter desired AAD App identifier Uri (does not have to be a valid address)

- Log in to AAD with administrative credentials (need to have permissions to create an AAD app)

- Verify that login is successful.

At this point the following things have happened:

- There is a new certificate stored in the personal store of the local computer. To see it, press [Win] + [R], type MMC, press [CTRL] + [M], select Certificates and add it, choose Computer Account, choose local computer, then expand Certificates => Personal => Certificates. The new self-signed certificate is listed there.

- The certificate has been exported to the folder you entered during the script execution.

Now, as an addition, I’m adding the certificate to my personal store just for this test. Just right-click the exported PFX file, choose install, and place it in the personal store of your user account.
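To make the rollover recommendation from this section a little more concrete, here is a minimal sketch in Python (used only to keep the example self-contained; it is not part of the PowerShell script). The function names `expiry_date` and `needs_rollover` and the 30-day lead time are assumptions made for illustration.

```python
from datetime import datetime, timedelta, timezone


def expiry_date(issued: datetime, validity_days: int = 365) -> datetime:
    """Return the end of the validity period for a certificate issued at `issued`."""
    return issued + timedelta(days=validity_days)


def needs_rollover(not_after: datetime, now: datetime, lead_days: int = 30) -> bool:
    """True once `now` is within `lead_days` of the expiry date (or past it).

    The 30-day default is an arbitrary illustrative value, not a recommendation
    taken from the script.
    """
    return now >= not_after - timedelta(days=lead_days)


if __name__ == "__main__":
    issued = datetime.now(timezone.utc)
    not_after = expiry_date(issued)
    print("Certificate expires:", not_after.isoformat())
    print("Rollover due now?", needs_rollover(not_after, issued))
```

A scheduled job could run a check like this against each certificate's expiry date and trigger the creation and registration of a replacement before the old one runs out.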

Adding an application user in Dynamics 365

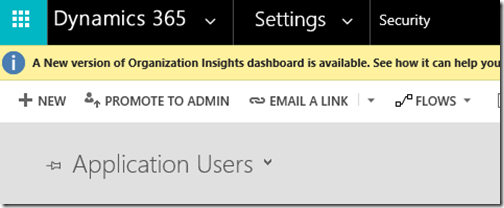

Now we go into Dynamics 365, then go to Settings and Security, and finally open Users. Change the default view to Application Users, then select New from the ribbon.

Add a username and the application ID of the newly created AAD application (you can find this through the Azure Portal as well). Additionally, add a name and email address. More information about creating application users is found in the Microsoft Docs. When you save the user, the rest of the information will be filled out, which lets you know that it found the application and managed to load the application details from Azure AD.

Finally, give it a security role so it has permissions to do stuff.

Log in to Dynamics 365 using the newly created certificate

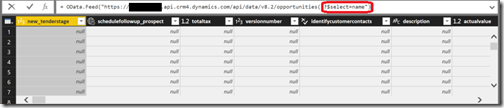

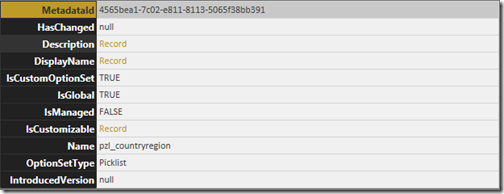

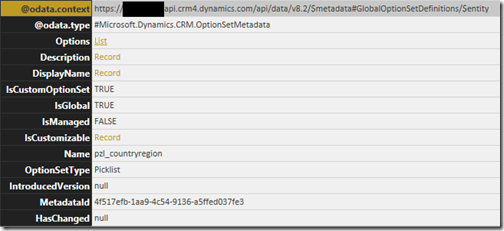

The complete code for this is available on my public GitHub repo found here.

First of all, you need to collect the application ID and the reply URL from the Azure AD application registered earlier. Additionally, you have to get the organization URL for your Dynamics 365 organization, and you need to get the certificate thumbprint from the certificate generated in the first step.

Don’t know how to find the thumbprint?

Open up MMC ([Win]+[R], type MMC and hit OK). Now add the certificate snap-in ([CTRL]+[M], add Certificates, choose “My User Account”, hit Finish and OK). Expand the personal certificates and find the name of the self-signed certificate. Open the certificate information, go to the Details tab and scroll down to the bottom, where you find the thumbprint. The thumbprint is a 40-character hexadecimal string.

CODE ALL THE THINGS!

Create a new .NET Framework console project in Visual Studio (or just copy/clone my repo), then do the following:

- Add the NuGet package; search for Microsoft.CrmSdk.XrmTooling.CoreAssembly

- Open app.config, add the following code into it (inside the <configuration></configuration> section)

    <appSettings>
        <add key="CertificateThumbPrint" value="certificate thumbprint here" />
        <add key="ClientId" value="application id from AAD app" />
        <add key="RedirectUri" value="redirecturl from AAD app" />
        <add key="DynamicsUrl" value="https://<organizationname>.crmX.dynamics.com/" />
    </appSettings>

- Add the values that you collected at the beginning of this section into the app config you just created

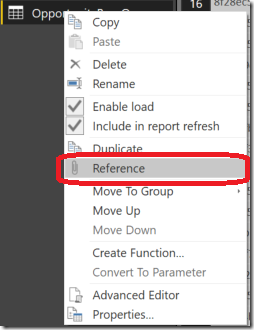

- Add a reference to System.Configuration in your project
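To show how the four settings fit together, here is a small illustrative sketch in Python (not the C# from the repo). It reads the `appSettings` keys from a config like the one above and assembles a connection string in the `AuthType=Certificate` form that the XrmTooling documentation describes. Whether the actual Program.cs uses a connection string or a constructor overload is an assumption here, the function names are hypothetical, and every value in the sample config is made up. `RedirectUri` is validated but left out of the string, because the documented certificate example does not include it.

```python
import xml.etree.ElementTree as ET

REQUIRED_KEYS = ("CertificateThumbPrint", "ClientId", "RedirectUri", "DynamicsUrl")

# Sample app.config content with made-up values, for illustration only.
SAMPLE_CONFIG = """<configuration>
  <appSettings>
    <add key="CertificateThumbPrint" value="A909502DD82AE41433E6F83886B00D4277A32A7B" />
    <add key="ClientId" value="00000000-0000-0000-0000-000000000000" />
    <add key="RedirectUri" value="https://localhost/" />
    <add key="DynamicsUrl" value="https://contoso.crm4.dynamics.com/" />
  </appSettings>
</configuration>"""


def read_app_settings(config_xml: str) -> dict:
    """Collect the <add key=... value=...> pairs and check the required ones exist."""
    root = ET.fromstring(config_xml)
    settings = {
        add.get("key"): add.get("value")
        for add in root.findall("./appSettings/add")
    }
    missing = [key for key in REQUIRED_KEYS if not settings.get(key)]
    if missing:
        raise KeyError("missing appSettings keys: " + ", ".join(missing))
    return settings


def build_connection_string(settings: dict) -> str:
    """Assemble a certificate-auth connection string (key names assumed from the docs)."""
    parts = {
        "AuthType": "Certificate",
        "url": settings["DynamicsUrl"],
        "thumbprint": settings["CertificateThumbPrint"],
        "ClientId": settings["ClientId"],
    }
    return ";".join(f"{key}={value}" for key, value in parts.items())


if __name__ == "__main__":
    print(build_connection_string(read_app_settings(SAMPLE_CONFIG)))
```

Failing early on a missing key is a deliberate choice: a blank thumbprint or client ID otherwise only shows up as a much less helpful authentication error at connection time.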

Now, if you haven’t cloned or copied my code already, copy the contents of this CS-file into your Program.cs

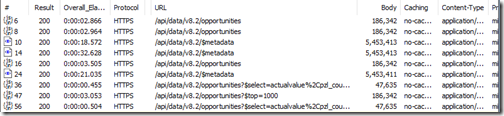

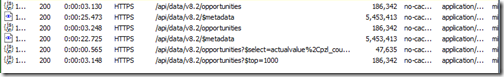

Add a breakpoint at the end of the code, then hit F5 and watch all good things come to fruition.

So what if I have a certificate file?

The wrap-up

Certificate authentication works like a charm with Dynamics 365 Online. If you combine this with certificate storage in Azure Key Vault, you can securely authenticate and integrate with Dynamics 365 without having to worry about app user credentials and password expiration (you still have to worry about certificates though, which isn’t really trivial).

We might see support for managed service accounts in the future, but for now this is a decent way to avoid the whole password management scheme for application users.